
OGRE-Next 3.0.0

Object-Oriented Graphics Rendering Engine


Here is a list of all the samples provided with Ogre Next and the features that they demonstrate. They are separated into three categories: Showcases, API Usage and Tutorials. The samples can be downloaded as a binary package from GitHub.

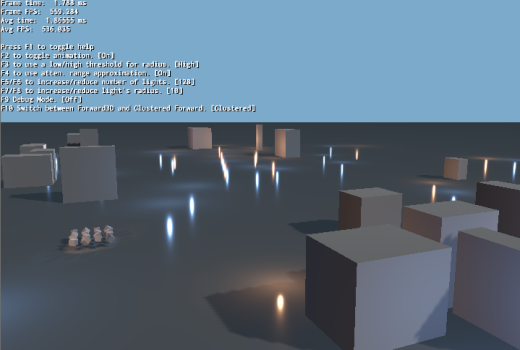

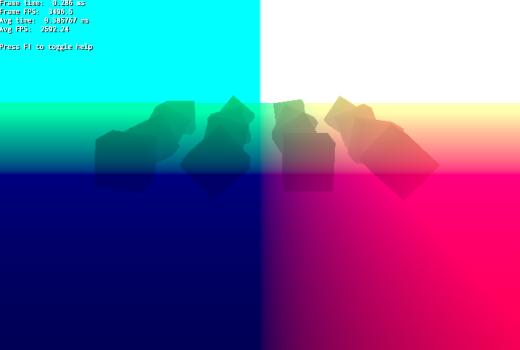

This sample demonstrates the Forward3D and Clustered techniques, which are capable of rendering many non-shadow-casting lights.
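Clustered shading divides the view frustum into a 3D grid of cells and assigns each light to the cells it overlaps, so each pixel only evaluates the lights in its own cell. The sketch below shows one common way of mapping a view-space depth to a depth slice: exponential (logarithmic) spacing between the near and far planes. The function name and the slicing scheme are illustrative assumptions, not Ogre's Forward3D/Clustered implementation.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical helper: maps a positive view-space distance to one of
// numSlices depth slices, spaced exponentially between zNear and zFar.
// Exponential spacing keeps cells roughly cubic as the frustum widens.
int clusterSlice(float viewDepth, float zNear, float zFar, int numSlices)
{
    assert(zNear > 0.0f && zFar > zNear && numSlices > 0);
    // Depths outside [zNear, zFar] land in the first or last slice.
    const float d = std::clamp(viewDepth, zNear, zFar);
    const float t = std::log(d / zNear) / std::log(zFar / zNear); // in [0, 1]
    const int slice = static_cast<int>(t * static_cast<float>(numSlices));
    return std::min(slice, numSlices - 1); // t == 1 would otherwise overflow
}
```

With 16 slices between 0.1 and 100 units, a depth of 1 falls in slice 5 and a depth of 10 in slice 10: each decade of distance gets the same number of slices.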

This sample demonstrates the HDR (High Dynamic Range) pipeline in action. Combined with PBR, HDR lets us use real-world values as input for our lighting, on real-world scales such as lumens, lux and EV stops.
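EV stops are a logarithmic scale: each stop doubles or halves the amount of light. The sketch below uses the standard photographic relation between exposure value at ISO 100 and scene luminance, L = 2^EV100 * K / 100, with the commonly used reflected-light meter constant K = 12.5. This is a general photometry formula for orientation, not code taken from Ogre's HDR sample.

```cpp
#include <cassert>
#include <cmath>

// Reflected-light meter calibration constant commonly used with EV at ISO 100.
constexpr double kMeterK = 12.5;

// Luminance (cd/m^2) corresponding to a meter reading of ev100.
double ev100ToLuminance(double ev100)
{
    return std::exp2(ev100) * kMeterK / 100.0;
}

// Inverse mapping: EV100 for a given (positive) luminance.
double luminanceToEv100(double luminance)
{
    assert(luminance > 0.0);
    return std::log2(luminance * 100.0 / kMeterK);
}
```

For example, EV100 = 3 corresponds to 1 cd/m^2, and raising the exposure value by one stop doubles the luminance.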

This sample demonstrates HDR in combination with SMAA.

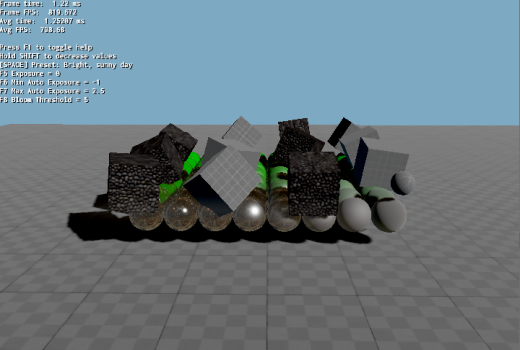

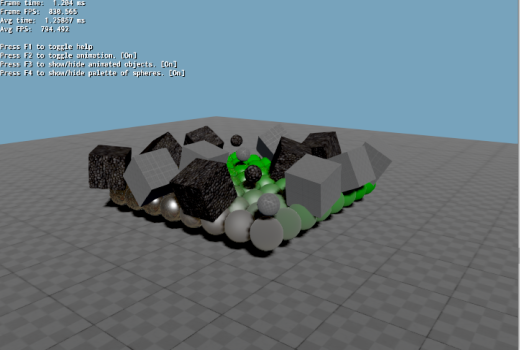

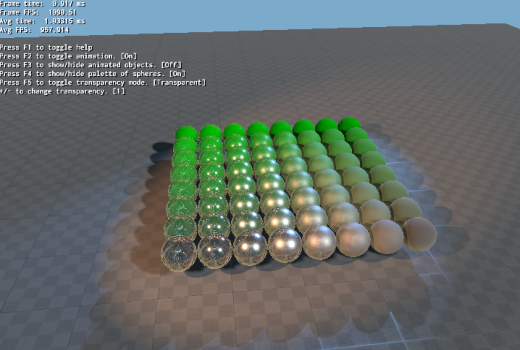

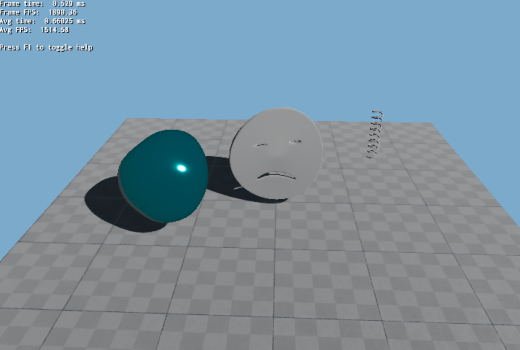

This sample demonstrates the PBS material system.

This sample demonstrates using the compositor for postprocessing effects.

This sample demonstrates multiple ways of using TagPoints to attach objects to bones.

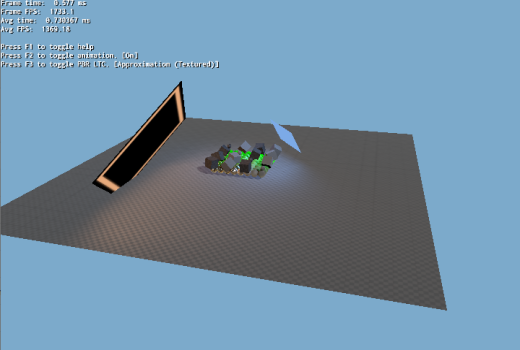

This sample demonstrates area light approximation methods.

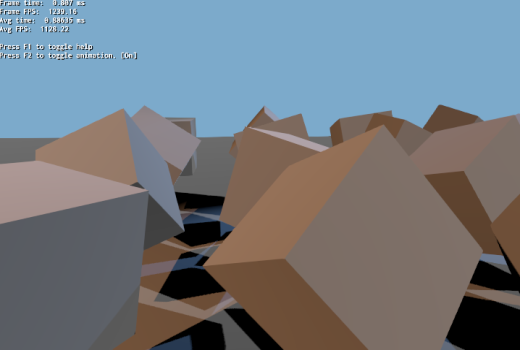

This sample demonstrates creating a custom class derived from both MovableObject and Renderable for fine control over rendering. See also the related 'Dynamic Geometry' sample.

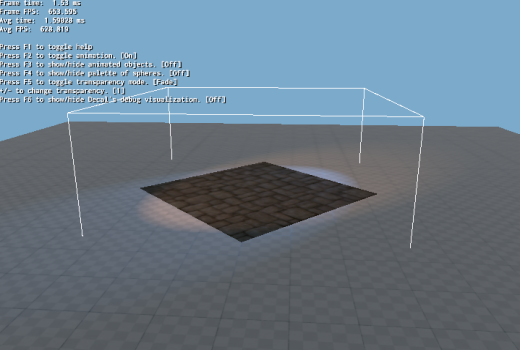

This sample demonstrates screen space decals.
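Screen space decals generally work by reconstructing each pixel's position from the depth buffer, transforming it into the decal's local box space, and leaving pixels outside that box untouched; the in-box coordinates then become the decal's UVs. The sketch below shows that projection step under simplifying assumptions: an axis-aligned decal box given by a centre and half-extents (a real implementation would use the decal's full inverse world transform) and projection along the box's Y axis. `DecalBox` and `decalUv` are hypothetical names, not Ogre API.

```cpp
#include <cassert>
#include <optional>

struct Vec3 { float x, y, z; };
struct Vec2 { float u, v; };

// Hypothetical axis-aligned decal volume, projecting along its Y axis.
struct DecalBox { Vec3 centre, halfExtents; };

// Returns decal UVs in [0, 1] if worldPos (reconstructed from depth) lies
// inside the decal volume, or nothing if the pixel should be left untouched.
std::optional<Vec2> decalUv(Vec3 worldPos, const DecalBox &box)
{
    assert(box.halfExtents.x > 0.0f && box.halfExtents.y > 0.0f &&
           box.halfExtents.z > 0.0f);
    // Normalised box space: the volume spans [-1, 1] on every axis.
    const Vec3 local{(worldPos.x - box.centre.x) / box.halfExtents.x,
                     (worldPos.y - box.centre.y) / box.halfExtents.y,
                     (worldPos.z - box.centre.z) / box.halfExtents.z};
    if (local.x < -1.0f || local.x > 1.0f || local.y < -1.0f ||
        local.y > 1.0f || local.z < -1.0f || local.z > 1.0f)
        return std::nullopt;
    // The box's XZ plane maps to texture space.
    return Vec2{local.x * 0.5f + 0.5f, local.z * 0.5f + 0.5f};
}
```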

This sample demonstrates creating a Mesh programmatically from code and updating it.

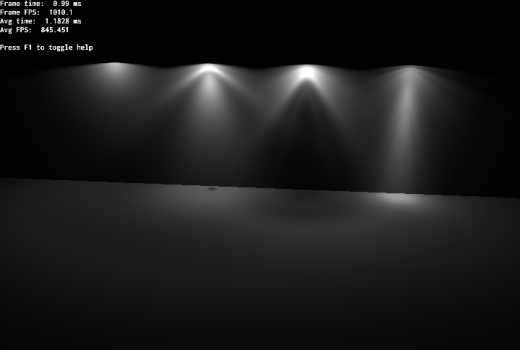

This sample demonstrates the use of IES photometric profiles.

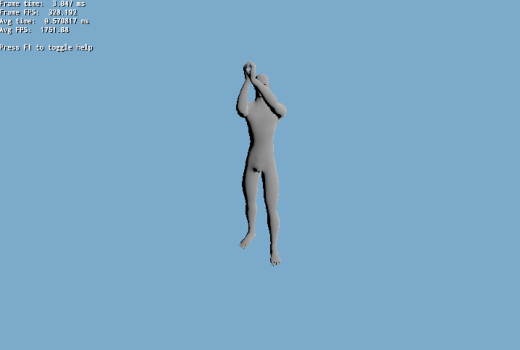

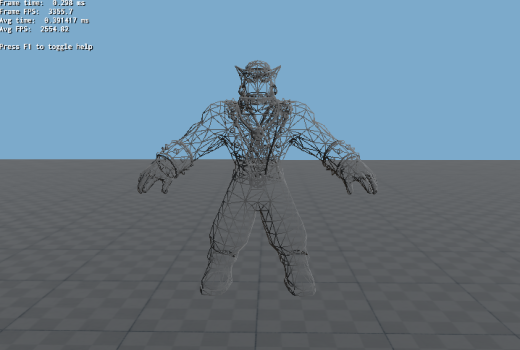

This sample demonstrates importing animation clips from multiple .skeleton files directly into the single skeleton of a v2Mesh, and sharing the same skeleton instance between the components of one actor or character, such as an RPG player wearing armour, boots, a helmet, etc. In this sample, the feet, hands, head, legs and torso are all separate items using the same skeleton.

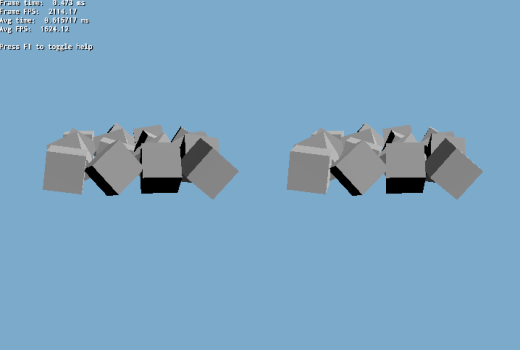

This sample demonstrates instanced stereo rendering, which is relevant to VR.

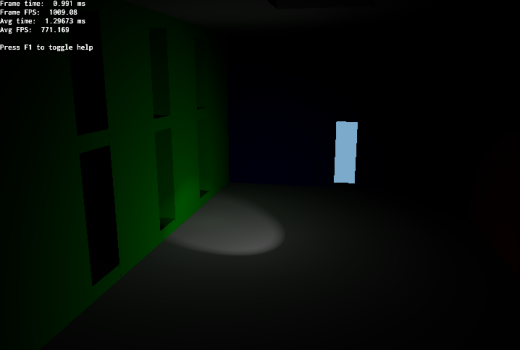

This sample demonstrates the use of 'Instant Radiosity' (IR). IR traces rays on the CPU and creates a VPL (Virtual Point Light) at every hit point to mimic the effect of Global Illumination.

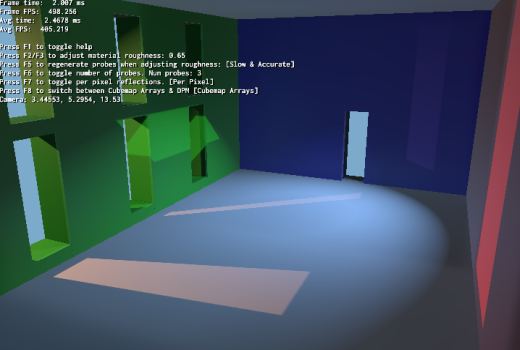

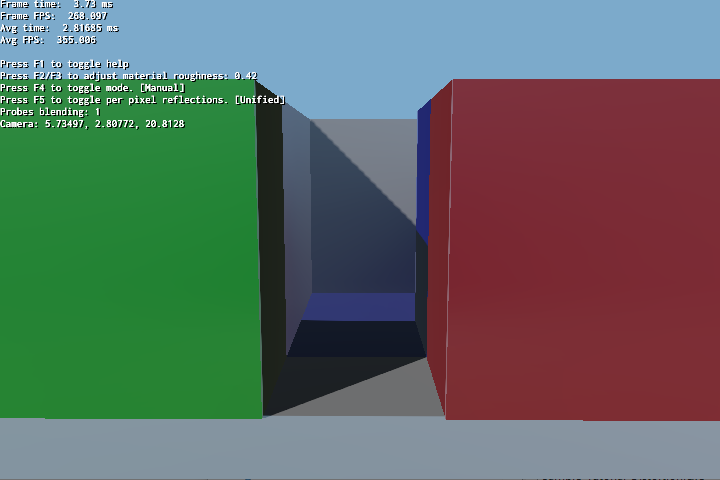

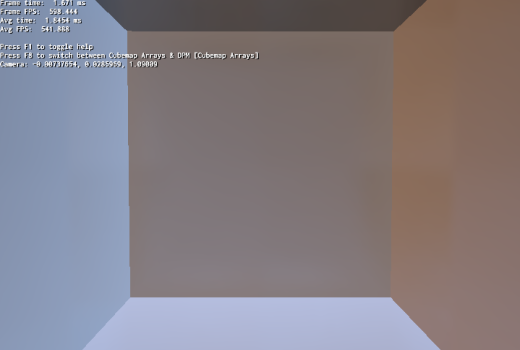

This sample demonstrates using parallax reflect cubemaps for accurate local reflections.

This sample demonstrates using parallax reflect cubemaps for accurate local reflections. This time, we showcase the differences between manual and automatic modes. Manual probes are camera-independent and work best for static objects.
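Parallax correction, in its common general form, treats the probe's surroundings as a box: the reflection ray from the shaded point is intersected with that box, and the cubemap is sampled along the direction from the probe's capture position to the hit point, rather than along the raw reflection vector. The sketch below assumes an axis-aligned box proxy with the shaded point inside it; the function name is hypothetical, and Ogre's actual shader code for these samples is not shown here.

```cpp
#include <algorithm>
#include <cassert>
#include <limits>

struct Vec3 { float x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }

// Returns the (unnormalised) direction to sample the cubemap with.
// pos: shaded point, R: reflection direction (world space).
// boxMin/boxMax: AABB approximating the room; probePos: capture position.
// Assumes pos lies inside the box.
Vec3 parallaxCorrect(Vec3 pos, Vec3 R, Vec3 boxMin, Vec3 boxMax, Vec3 probePos)
{
    // Distance along R to the box face the ray is heading towards, per axis.
    auto axisT = [](float p, float r, float lo, float hi) {
        if (r == 0.0f)
            return std::numeric_limits<float>::infinity();
        return ((r > 0.0f ? hi : lo) - p) / r;
    };
    const float t = std::min({axisT(pos.x, R.x, boxMin.x, boxMax.x),
                              axisT(pos.y, R.y, boxMin.y, boxMax.y),
                              axisT(pos.z, R.z, boxMin.z, boxMax.z)});
    assert(t >= 0.0f); // holds when pos is inside the box
    const Vec3 hit = pos + R * t; // exit point of the ray on the box
    return hit - probePos;
}
```

In a 10-unit box with the probe at its centre, a point at (4, 0, 0) reflecting along +Z samples the cubemap along (4, 0, 5) instead of (0, 0, 1), which is what makes the reflection line up with nearby geometry.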

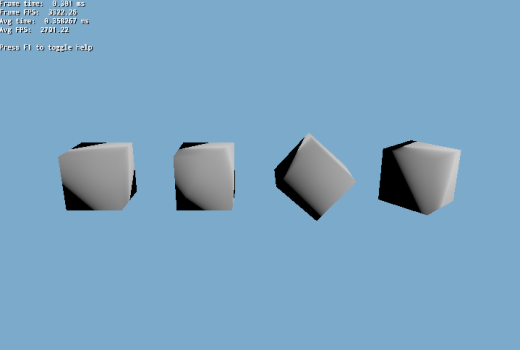

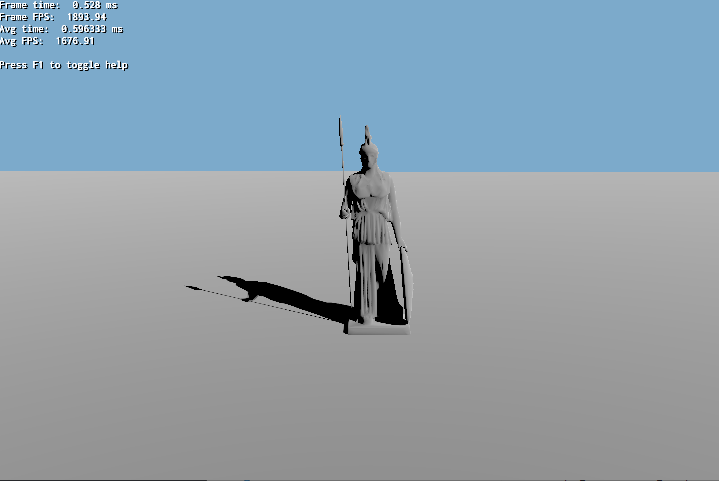

This sample demonstrates the automatic generation of LODs from an existing mesh.

This sample demonstrates morph animations.
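Morph (blend shape) animation stores one or more target poses per vertex and blends towards them with per-target weights. The sketch below shows the usual relative formulation, v = base + sum_i w_i * (target_i - base), on the CPU. The function name is hypothetical; Ogre's sample drives the blending through its own animation system rather than through a function like this.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };

// Blends the base positions towards each morph target by its weight.
// Every targets[i] must have the same vertex count as base.
std::vector<Vec3> applyMorph(const std::vector<Vec3> &base,
                             const std::vector<std::vector<Vec3>> &targets,
                             const std::vector<float> &weights)
{
    assert(targets.size() == weights.size());
    std::vector<Vec3> out = base;
    for (std::size_t t = 0; t < targets.size(); ++t) {
        assert(targets[t].size() == base.size());
        for (std::size_t v = 0; v < base.size(); ++v) {
            // Accumulate this target's weighted offset from the base pose.
            out[v].x += weights[t] * (targets[t][v].x - base[v].x);
            out[v].y += weights[t] * (targets[t][v].y - base[v].y);
            out[v].z += weights[t] * (targets[t][v].z - base[v].z);
        }
    }
    return out;
}
```

With all weights at 0 the base mesh is returned unchanged; a weight of 1 on a single target reproduces that target exactly.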

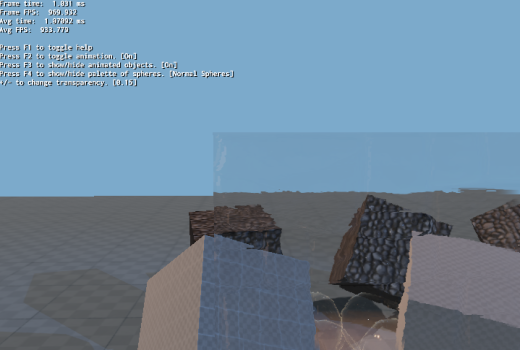

This sample demonstrates placing multiple PCC probes automatically.

This sample demonstrates planar reflections.
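Planar reflections are typically rendered from a camera mirrored about the reflection plane. For a plane dot(n, p) + d = 0 with unit normal n, a point reflects to p' = p - 2 (dot(n, p) + d) n, and a direction reflects to v' = v - 2 dot(n, v) n. The sketch below implements just that formula; the names are hypothetical, and how Ogre's sample sets up the mirrored camera and render target is not shown.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Plane in the form dot(n, p) + d = 0, with n of unit length.
struct Plane { Vec3 n; float d; };

// Mirrors a point (e.g. the camera position): p' = p - 2 (n.p + d) n.
Vec3 reflectPoint(Vec3 p, Plane pl)
{
    assert(std::fabs(dot(pl.n, pl.n) - 1.0f) < 1e-4f);
    const float dist = dot(pl.n, p) + pl.d; // signed distance to the plane
    return {p.x - 2.0f * dist * pl.n.x,
            p.y - 2.0f * dist * pl.n.y,
            p.z - 2.0f * dist * pl.n.z};
}

// Mirrors a direction (e.g. the camera's forward vector); d does not apply.
Vec3 reflectDir(Vec3 v, Vec3 n)
{
    assert(std::fabs(dot(n, n) - 1.0f) < 1e-4f);
    const float k = 2.0f * dot(n, v);
    return {v.x - k * n.x, v.y - k * n.y, v.z - k * n.z};
}
```

For a water plane at y = 1, a camera at (3, 5, 2) looking down and forward along (0, -1, 1) is mirrored to (3, -3, 2), looking up along (0, 1, 1).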

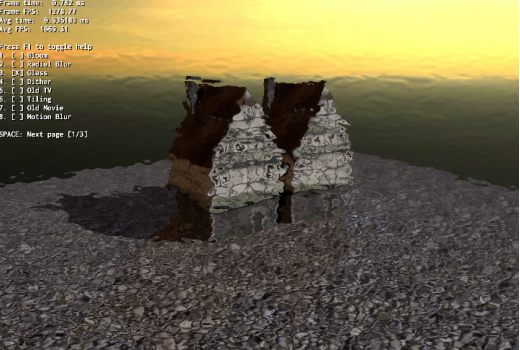

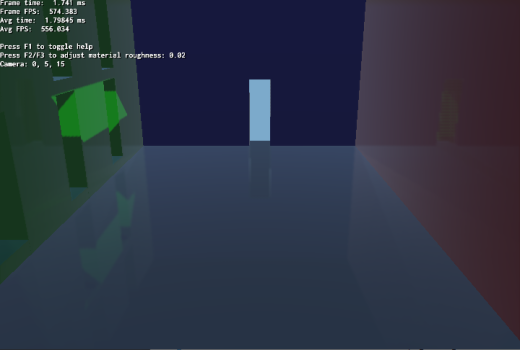

This sample demonstrates refractions.
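Refraction bends the view ray according to Snell's law as it crosses into a medium with a different index of refraction. The sketch below is the standard vector form, the same formula documented for GLSL's built-in `refract(I, N, eta)`, where eta is the ratio of the refractive indices (n1 / n2). How Ogre's sample then uses the bent ray to sample what lies behind the refractive object is not shown.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Same formula as GLSL's refract(I, N, eta).
// I: unit incident direction, N: unit normal facing against I, eta: n1 / n2.
// Returns the zero vector on total internal reflection.
Vec3 refract(Vec3 I, Vec3 N, float eta)
{
    const float cosI = dot(N, I);
    const float k = 1.0f - eta * eta * (1.0f - cosI * cosI);
    if (k < 0.0f)
        return {0.0f, 0.0f, 0.0f}; // total internal reflection
    const float s = eta * cosI + std::sqrt(k);
    return {eta * I.x - s * N.x, eta * I.y - s * N.y, eta * I.z - s * N.z};
}
```

Going from air into water (eta = 1 / 1.33), a ray hitting the surface head-on passes straight through, while an oblique ray bends towards the normal; going from glass into air (eta = 1.5) at a steep enough angle, the function reports total internal reflection.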

This sample demonstrates exporting/importing of a scene to JSON format. Includes the exporting of meshes and textures to a binary format.

This sample demonstrates screen space reflections.

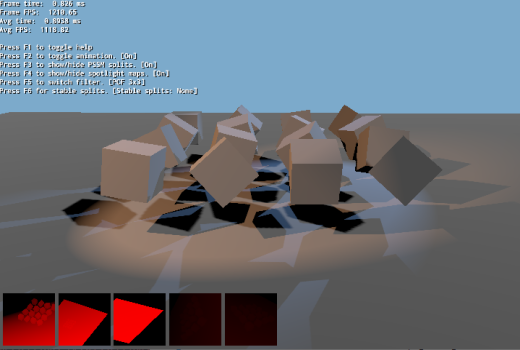

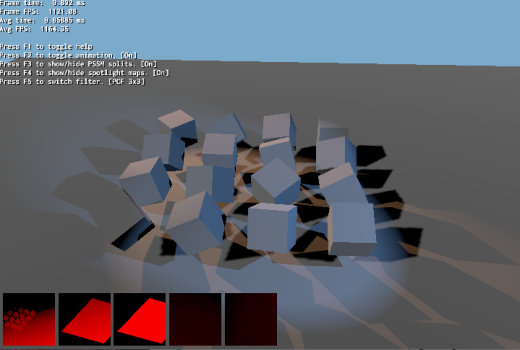

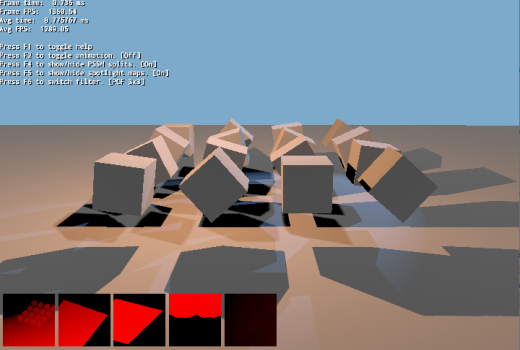

This sample demonstrates rendering the shadow map textures from a shadow node (compositor).

This is similar to the 'Shadow Map Debugging' sample. The main difference is that the shadow nodes are generated programmatically via ShadowNodeHelper::createShadowNodeWithSettings instead of relying on Compositor scripts.

This sample demonstrates the use of static/fixed shadow maps to increase performance.

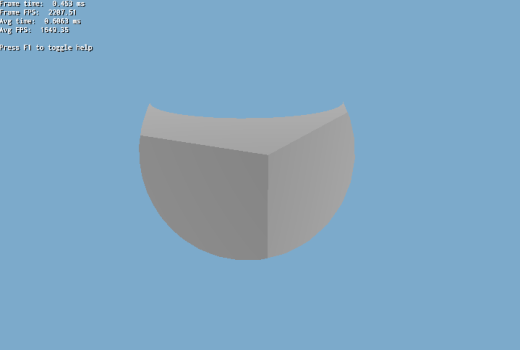

This sample demonstrates the use of the stencil test. In the first pass, a sphere is drawn, filling the stencil buffer with 1s. In the next pass, a cube is drawn, but only where stencil == 1.
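To make the two-pass logic concrete, the sketch below simulates a stencil buffer on the CPU over a small pixel grid. The grid, the `Buffers` struct and the coverage callbacks are hypothetical stand-ins for the sample's sphere and cube draws, not Ogre API; on the GPU, both passes are expressed as render state (stencil reference value, compare function and pass operation) rather than loops.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <vector>

constexpr int kW = 8, kH = 8;

struct Buffers {
    std::vector<std::uint8_t> stencil = std::vector<std::uint8_t>(kW * kH, 0);
    std::vector<char> color = std::vector<char>(kW * kH, '.');
};

// Whether a shape covers the pixel at (x, y).
using Coverage = std::function<bool(int x, int y)>;

// Pass 1: wherever the shape covers a pixel, write 1 into the stencil.
// Colour is not written, as is typical for a mask pass.
void stencilWritePass(Buffers &b, const Coverage &covers)
{
    for (int y = 0; y < kH; ++y)
        for (int x = 0; x < kW; ++x)
            if (covers(x, y))
                b.stencil[y * kW + x] = 1;
}

// Pass 2: draw the shape only where stencil == 1 (compare function EQUAL).
void stencilTestPass(Buffers &b, const Coverage &covers, char colour)
{
    for (int y = 0; y < kH; ++y)
        for (int x = 0; x < kW; ++x)
            if (covers(x, y) && b.stencil[y * kW + x] == 1)
                b.color[y * kW + x] = colour;
}
```

Only the pixels covered by both the "sphere" and the "cube" end up coloured: the cube is clipped to the sphere's silhouette.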

This sample demonstrates stereo rendering.

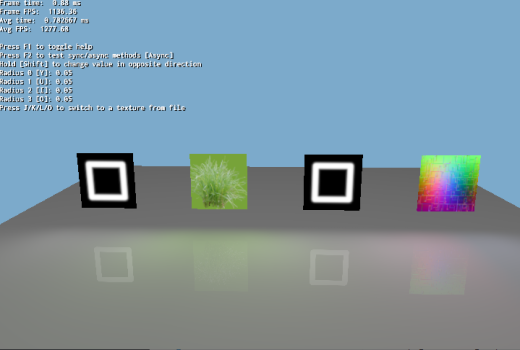

This sample demonstrates creating area light textures dynamically and individually. The same approach can also be used for decal textures.

This sample demonstrates the use of Ogre V1 objects (e.g. Entity) in Ogre Next.

This sample demonstrates the use of Ogre Next manual objects, which ease porting code from Ogre V1. For better performance, see the 'Dynamic Geometry' sample.

This sample demonstrates converting Ogre V1 meshes to Ogre Next V2 format.

This tutorial demonstrates the basic setup of Ogre Next to render to a window. It uses hardcoded paths; see the next tutorial for how to properly handle setup on all OSes and set up a render loop.

This tutorial demonstrates the setup of Ogre Next in a basic framework.

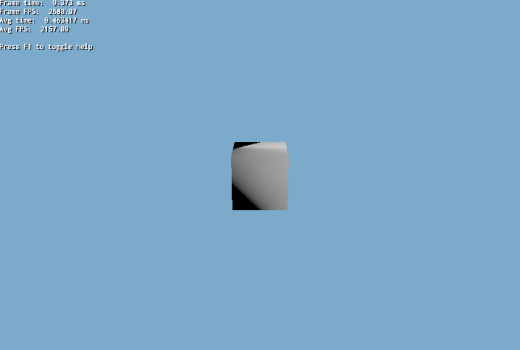

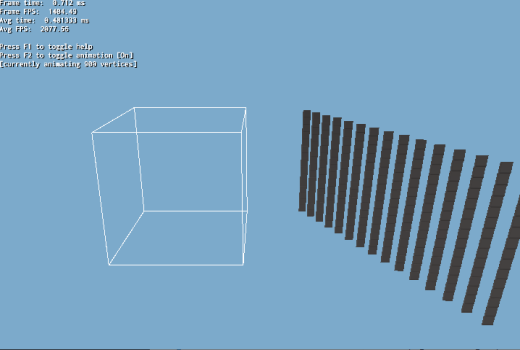

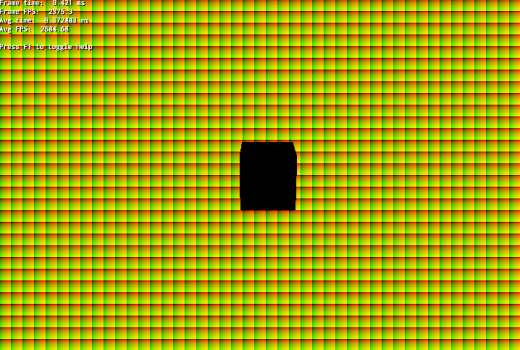

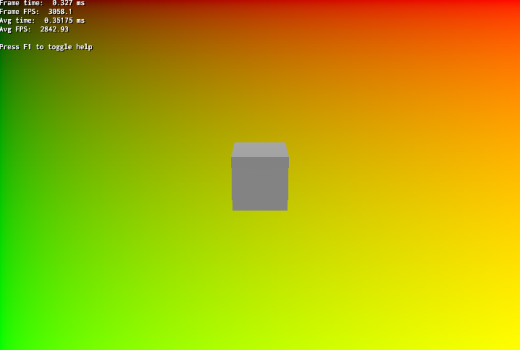

This tutorial demonstrates the most basic rendering loop: variable framerate. Variable framerate means the application adapts to the current rendering performance, increasing or decreasing how far objects move each frame so that they appear to move at a constant velocity. Despite appearances, this is the most basic form of updating; progress through the tutorials for superior methods of updating the rendering loop. Note: the cube is black because there is no lighting.
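The core of a variable-framerate update is scaling every movement by the duration of the previous frame, so speed stays constant in world units per second regardless of frame rate. The sketch below is illustrative only: `advanceYaw` and `runVariableLoop` are hypothetical names, not the tutorial's code, and the loop simply measures frame time with `std::chrono::steady_clock` before calling update and render callbacks.

```cpp
#include <cassert>
#include <chrono>

// Advances an angle (radians) at a constant angular speed, scaled by the
// duration of the previous frame: slow frames take larger steps, fast frames
// smaller ones, so the apparent speed stays the same.
double advanceYaw(double yaw, double radiansPerSecond, double timeSinceLast)
{
    assert(timeSinceLast >= 0.0);
    return yaw + radiansPerSecond * timeSinceLast;
}

// Typical loop shape: measure the last frame's duration, update, render.
template <typename UpdateFn, typename RenderFn>
void runVariableLoop(int frames, UpdateFn update, RenderFn render)
{
    using Clock = std::chrono::steady_clock;
    auto last = Clock::now();
    for (int i = 0; i < frames; ++i) {
        const auto now = Clock::now();
        const double dt = std::chrono::duration<double>(now - last).count();
        last = now;
        update(dt);
        render();
    }
}
```

Running 30 frames of 1/30 s or 60 frames of 1/60 s both rotate an object by the same 2 radians over one second, which is the property this approach relies on.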

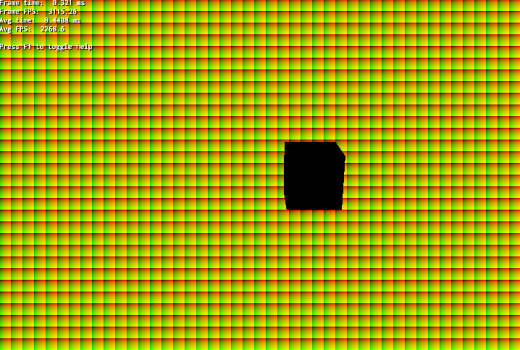

This is very similar to Tutorial 02, except that it uses a fixed framerate instead of a variable one. There are many reasons to use a fixed framerate instead of a variable one:

This tutorial demonstrates combined fixed and variable framerate: logic is executed at 25 Hz, while graphics are rendered at a variable rate, interpolating between frames to achieve a smooth result. When OGRE or the GPU takes too long, you will see a 'frame skip' effect; when the CPU takes too long to process the logic code, you will see a 'slow motion' effect. This combines the best of both worlds and is the recommended approach for serious game development. The only two disadvantages of this technique are:

This tutorial demonstrates how to set up two update loops, one for graphics and another for logic, each in its own thread. Nothing is rendered, because creating, destroying and updating Entities would now require robust synchronization, which is potentially too complex to show in a single tutorial step.

This tutorial demonstrates advanced multithreading. It introduces the 'GameEntity' structure, which encapsulates a game object's data: its graphics (i.e. Entity and SceneNode) and its physics/logic data (a transform, the hkpEntity/btRigidBody pointers, etc.). The GameEntityManager is responsible for telling the render thread to create the graphics, and it delays deleting a GameEntity until all threads are done using it.

This tutorial demonstrates how to set up and use UAV textures with compute shaders.

This tutorial demonstrates how to set up and use UAV buffers with compute shaders.

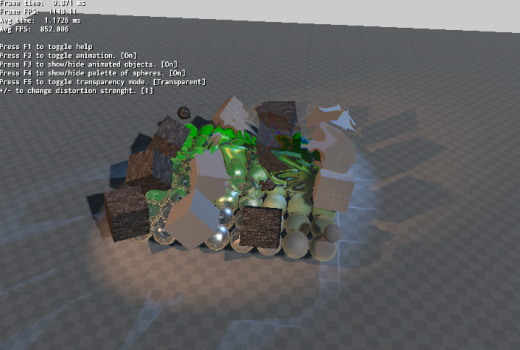

This tutorial demonstrates how to make a compositing setup that renders different parts of the scene to different textures. Here, the distortion pass is rendered to its own texture, and a shader composes the scene and the distortion pass. A distortion setup can be used to create blast wave effects, or mixed with fire particle effects to get heat distortion, etc. You can use this setup with all kinds of objects; this example uses only simple textured spheres, but for proper use you should use particle systems to get better results.

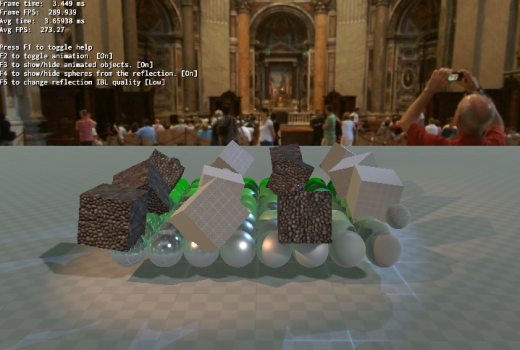

This tutorial demonstrates how to set up dynamic cubemapping via the compositor, so that it can be used for dynamic reflections.

This tutorial demonstrates how to run Ogre headless using EGL, which can be useful for running in a VM or in the cloud.

This tutorial demonstrates the use of OpenVR.

This tutorial demonstrates how to efficiently reconstruct the position from only the depth buffer. This is very useful for Deferred Shading, SSAO, etc.
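A cheap way to reconstruct position avoids a full inverse projection per pixel: linearise the hardware depth with two constants precomputed per camera, then scale a per-pixel view ray (typically interpolated from the frustum corners) by the result. The sketch below assumes a right-handed view space looking down -Z, a standard (non-reversed) perspective projection storing depth in [0, 1], and a view ray scaled so that its z component is -1. Under those assumptions depth = A + B / d with A = f / (f - n) and B = -n f / (f - n), so d = B / (depth - A). The names are hypothetical, and Ogre's tutorial does the equivalent in a shader with its own conventions, which may differ (for example, reverse-Z).

```cpp
#include <cassert>

struct Vec3 { float x, y, z; };

// Precomputed once per camera: depth = projA + projB / linearDepth.
struct ProjectionParams { float projA, projB; };

ProjectionParams makeProjectionParams(float zNear, float zFar)
{
    assert(zNear > 0.0f && zFar > zNear);
    return {zFar / (zFar - zNear), -zNear * zFar / (zFar - zNear)};
}

// Forward mapping, used here only to produce input: view-space distance
// along -Z to [0, 1] depth (0 at the near plane, 1 at the far plane).
float depthFromLinear(float linearDepth, ProjectionParams p)
{
    return p.projA + p.projB / linearDepth;
}

// The reconstruction: one subtraction, one division and one scale per pixel.
// viewRay must point through the pixel and have z == -1.
Vec3 reconstructViewPos(float depth, Vec3 viewRay, ProjectionParams p)
{
    const float linearDepth = p.projB / (depth - p.projA);
    return {viewRay.x * linearDepth, viewRay.y * linearDepth,
            viewRay.z * linearDepth};
}
```

For a view-space point (2, -1, -10), the ray through its pixel is (0.2, -0.1, -1); feeding that ray and the point's stored depth back in recovers the point up to floating-point error.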

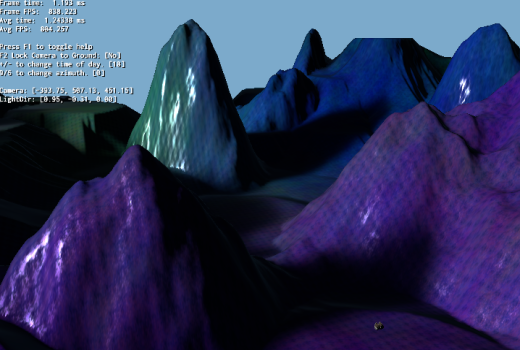

This tutorial demonstrates how to create a sky as a simple postprocess effect.

This tutorial demonstrates SMAA.

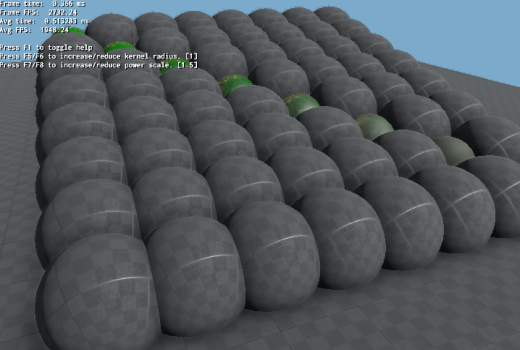

This tutorial demonstrates SSAO.

This tutorial is advanced and shows several things working together:

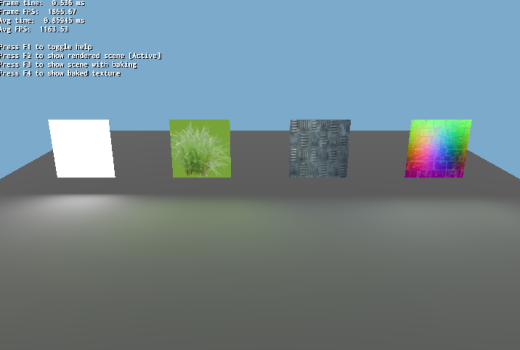

This tutorial demonstrates how to bake the render result of Ogre into a texture (e.g. for lightmaps).

This tutorial demonstrates how to set up a UAV (Unordered Access View). UAVs are complex and aimed at advanced users, but they're very powerful and enable a whole new level of features and possibilities. This sample first fills a UAV with some data, then renders to screen, sampling from it as a texture.

This sample is exactly like 'UAV Setup 1 Example', except that it reads from the UAVs as UAVs (e.g. using imageLoad) instead of as textures.